RankBrain is Google’s artificial intelligence system for ranking pages in its search results. It uses machine learning, including deep learning techniques, to keep improving itself. It is capable of semantic understanding, and has its own ideas about how to combine signals and interpret documents.

So how does it work? Paul Haahr, senior ranking engineer at Google, made this provocative/terrifying statement:

“We understand how it works, but not as much what it’s doing. It uses a lot of the stuff that we’ve published about deep learning.” – Paul Haahr

We know very little about the details, but there are at least two large parts of RankBrain. The first one is a very clever invention called “word vectors”.

Word vectors

One of the specific technologies behind this, for which Google holds a patent, is called Word2Vec. You may have heard of vectors in other contexts, such as vector graphics. As opposed to raster graphics, which hold information about each pixel in an image, vector graphics hold only data about key points on the X/Y axes, called “nodes”, and the relative distance between them, in order to draw paths. The advantage is that you can scale the image to any size without distortion.

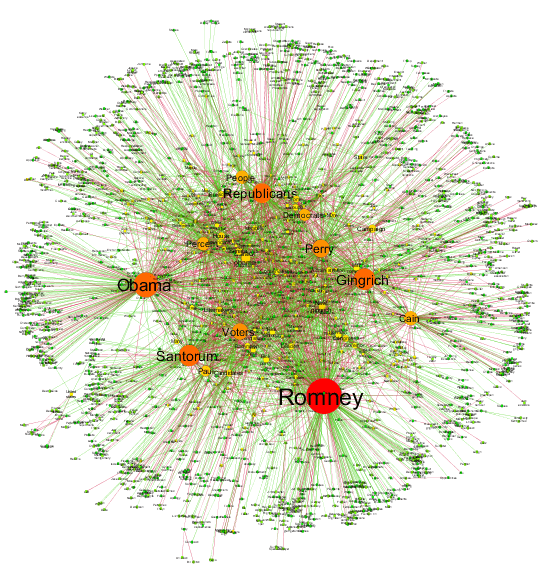

So, how do vectors work in language? One way to look at it is by imagining a mind map of every word in existence, with lines drawn between any two related words, each line assigned a score depending on how closely the words are related. The underlying claims are:

- Unknown words in sequences of words can be effectively predicted if the surrounding words are known

- Words surrounding a known word in a sequence of words can be effectively predicted

- Numerical representations of words in a vocabulary of words can be easily and effectively generated

- The numerical representations can reveal semantic and syntactic similarities and relationships between the words that they represent
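The first two points above can be illustrated with a minimal sketch of how training examples might be built from a sentence. This is not Google's code: the function names `cbow_examples` and `skipgram_pairs` and the `window` parameter are hypothetical, chosen here for illustration only. The first function pairs the surrounding words with the word to be predicted, the second pairs a known word with each of its neighbours.

```python
def cbow_examples(tokens, window=2):
    """Pair each word with its surrounding words: (context words, target word)."""
    examples = []
    for i, target in enumerate(tokens):
        # Up to `window` words on each side, excluding the target itself.
        context = tokens[max(0, i - window):i] + tokens[i + 1:i + window + 1]
        examples.append((context, target))
    return examples


def skipgram_pairs(tokens, window=2):
    """Pair each word with every neighbour within `window` positions."""
    pairs = []
    for i, center in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


sentence = "the kitten chased the puppy".split()
for context, target in cbow_examples(sentence, window=1):
    print(context, "->", target)
```

A model trained on millions of such examples has to place words that appear in similar contexts near each other, which is where the numerical representations in the last two points come from.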

Part of how this relevance is determined is by automatically analyzing huge amounts of data, and part of it is similar to how certain human intelligence tests work. For example, it will learn that kitten is to cat what puppy is to dog. It will know that prince is to king what princess is to queen, but also that prince is the opposite of princess, and that king is the opposite of queen. At the same time, words such as “commoner” or “subject” may be considered the opposite of king.

If you search for “Stockholm Sweden Finland”, the most closely related term might be “Helsinki”, based on the sequence of words. Sometimes it works flawlessly, in a way that seems almost like magic. However, the weakness becomes apparent to the human mind when words such as “strong”, “powerful” and “Paris” end up equally distant from each other on the “map” (an example given by Google), even though the first two are obviously much closer in meaning.
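Here is a toy sketch of how the “Stockholm Sweden Finland” lookup could work as plain arithmetic on word vectors. The three-dimensional vectors are made up by hand for illustration (real models learn hundreds of dimensions from data), and the `analogy` helper is a hypothetical name, not part of any Google API.

```python
import math

# Hand-made toy vectors: (capital-ness, Sweden-ness, Finland-ness).
# These values are assumptions for illustration, not learned embeddings.
VECTORS = {
    "stockholm": (1.0, 1.0, 0.0),
    "sweden":    (0.0, 1.0, 0.0),
    "finland":   (0.0, 0.0, 1.0),
    "helsinki":  (1.0, 0.0, 1.0),
    "turku":     (0.2, 0.0, 1.0),
}


def cosine(u, v):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def analogy(a, b, c):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the inputs."""
    query = tuple(x - y + z for x, y, z in zip(VECTORS[a], VECTORS[b], VECTORS[c]))
    candidates = [w for w in VECTORS if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(query, VECTORS[w]))


print(analogy("stockholm", "sweden", "finland"))
```

Subtracting “Sweden” from “Stockholm” leaves something like “capital of”, and adding “Finland” moves that idea to a new country. The same distance measure is also the source of the weakness: it only knows how far apart two points are, not why.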

That is why it doesn’t just analyze individual words, but entire sentences, paragraphs, and documents to establish relevance between whole concepts – and it keeps learning as it goes along. It is also capable of sentiment analysis, so it could use reviews and testimonials as a ranking factor. This is unconfirmed (as far as I know), but it would be silly not to use it.

DeepDream

You may have seen some of these creepy, beautiful “dreams” generated by Google DeepDream. It is image recognition software that uses a convolutional neural network to discover patterns in photographs and other images. Google Image Search uses image recognition to improve its results, though it’s unclear whether this is the only technology involved.

It can recognize faces, furniture, tools and articles of clothing, identify species of flowers, and do many more amazing things. I would speculate that it even reads the text in images. The hallucinatory images are produced when the process is allowed to keep running, to find less and less obvious patterns. It may be similar to how humans discover patterns, especially faces, in many inanimate objects. In the example above, you can see it tries to find more dogs in the picture, since it already found one “doge”.
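The “keep running” idea can be sketched in a few lines. DeepDream works by repeatedly nudging the image so that whatever a network layer already detects gets stronger. The toy below is a heavily simplified assumption of that loop, not Google's implementation: the “image” is a short list of numbers, the “network” is a single fixed pattern detector (`kernel`), and the names `responses`, `objective` and `dream` are hypothetical.

```python
def responses(signal, kernel):
    """Slide the pattern detector over the signal and record how strongly it fires."""
    n, m = len(signal), len(kernel)
    return [sum(kernel[j] * signal[k + j] for j in range(m)) for k in range(n - m + 1)]


def objective(signal, kernel):
    """Total strength of the detector's responses (half the sum of squares)."""
    return 0.5 * sum(r * r for r in responses(signal, kernel))


def dream(signal, kernel, steps=20, step_size=0.1):
    """Gradient ascent on the input: change the signal so the detector fires harder."""
    x = list(signal)
    m = len(kernel)
    for _ in range(steps):
        resp = responses(x, kernel)
        # Gradient of the objective with respect to every input value.
        grad = [0.0] * len(x)
        for k, r in enumerate(resp):
            for j in range(m):
                grad[k + j] += r * kernel[j]
        # Normalise by the mean absolute gradient so each step has a similar size.
        scale = sum(abs(g) for g in grad) / len(grad)
        if scale == 0:
            break  # the detector sees nothing at all, so there is nothing to amplify
        x = [xi + step_size * g / scale for xi, g in zip(x, grad)]
    return x


faint = [0.0, 0.1, 0.0, -0.1, 0.0, 0.1, 0.0, 0.0]  # barely visible pattern
spike_detector = [-1.0, 2.0, -1.0]                  # fires on local peaks
dreamed = dream(faint, spike_detector)
print(objective(faint, spike_detector), "->", objective(dreamed, spike_detector))
```

Each pass makes a faint pattern a little more pronounced, and the next pass then finds it even more convincing. With a real network that has learned to detect dogs, the same feedback loop is what fills a picture with dogs.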

Are the sci-fi predictions coming true?

In his keynote speech at SMX West 2016, Behshad Behzadi, Director of Search at Google in Zürich, spoke about the future of search. Behshad stated that when they are developing the technology of the future, they often look to science fiction for inspiration. Yes, Skynet is real.

To set the stage, he showed two clips. The first clip was from the original “Star Trek” TV series, where Captain Kirk verbally asks questions of his computer and receives answers in real time. It was set 200 years in the future, but seems very similar to Siri and Google Now.

The second clip was a trailer for the movie “Her”, in which the first fully AI-driven operating system is imagined – 20 years in the future. He then answered the question “Is this the future of search?” with a reserved: “Kind of.”

Considering how fast technology is evolving, we can only imagine…

Also check out part 1: How does Google work?
